Nonet at SemEval-2023 Task 6: Methodologies for Legal Evaluation

Dataset Curation and Preprocessing

The study utilized a custom dataset comprising 13,000+ annotated legal documents from Indian courts, split into preamble and judgment sections. Preprocessing involved removing redundant spaces, non-alphanumeric characters, and aligning entity spans after cleaning. For L-NER, 18 entity types (e.g., statute, precedent, court) were annotated, with 80% of data used for training and 20% for validation. Unique challenges included handling lengthy documents (avg. 2,315 words) and aligning subword tokenization with part-of-speech tags for BERT-CRF models.

Innovative Model Fusion for Legal Entity Recognition

A hybrid approach combined spaCy’s RoBERTa transformer with Law2Vec embeddings and a BERT-CRF model enhanced by part-of-speech tags. The spaCy pipeline achieved 85.5% F1 on preamble entities, while BERT-CRF scored 83.7% on judgment entities. Model fusion merged overlapping entity predictions, improving accuracy by 2%. Challenges included sparse entity distributions and misaligned tokenization, resolved through regex-based span correction and POS-tag embeddings.

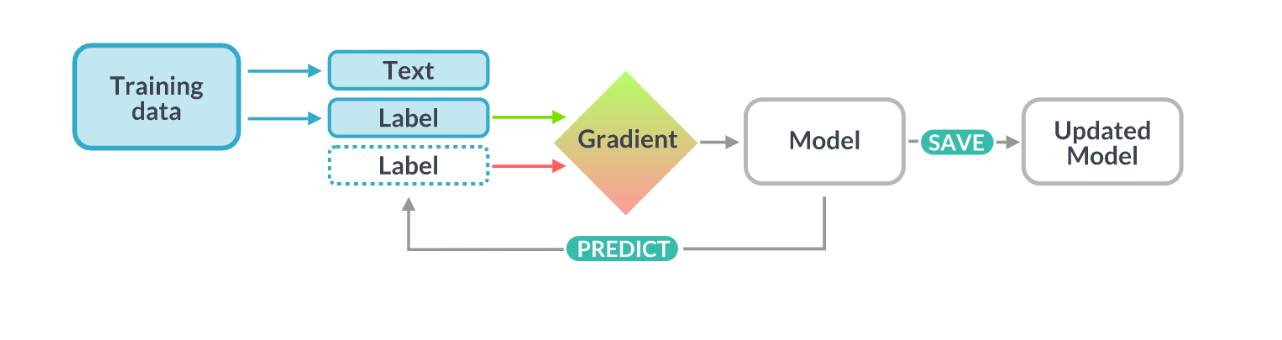

Training workflow for the Nonet

Hierarchical Transformers for Judgment Prediction

Legal documents were processed using XLNet-large and hierarchical transformers with 512-token chunks and 100-token overlaps. Models trained on the last 510 tokens achieved 76.49% F1, outperforming InLegalBERT and RoBERTa. Despite hierarchical architectures (BiGRU + attention) underperforming due to limited data, truncation strategies prioritized context from concluding sections, aligning with findings that critical reasoning appears in final segments.

Explanation Generation for Court Judgments

The research developed AI systems to predict court decisions and explain them in simple terms. By analyzing the concluding sections of judgments (last 300–550 words), where judges summarize key rulings, the models generated explanations matching 47% of human-written justifications. For outcome prediction, phrases like “case disposed of” were used to classify results with 83% accuracy. The system also categorized judgment text into roles like “court’s ruling,” finding 22.8% focused on final decisions. Simpler, expert-validated methods were prioritized over complex techniques to ensure clarity and practicality for real-world legal use.

Evaluation and Results

The models excelled in SemEval-2023 Task 6, achieving 76.49% F1 in Legal Judgment Prediction and 85.5% F1 in Legal NER using fused transformer-based approaches. Explanation extraction via span analysis yielded a ROUGE-2 score of 0.0473, with keyword-based outcome identification reaching 83% accuracy. Despite challenges like sparse entities and lengthy texts, the pipeline ranked 1st in explanation tasks, showcasing scalable solutions for India's judicial system.